About Me

I am a graduate of the University of Florida with a Bachelor’s in Aerospace Engineering and a new Ph.D. student in Aeronautics & Astronautics at the University of Washington. My experience spans:

- Two industry internships with Blue Origin working on controls & navigation engineering.

- Four internships with NASA covering GN&C and software engineering research.

- Undergraduate research with UF’s ADAMUS Laboratory.

Follow my experiences for yourself through this detailed portfolio of my contributions and accomplishments! If you are interested in connecting, I can be contacted at buckner.c.samuel@gmail.com.

(All media shown has been approved for public distribution)

Internships

GN&C Autonomous Flight Systems Intern

NASA Johnson Space Center Pathways Co-Op Program

Summer 2020

Houston, Texas

Overview

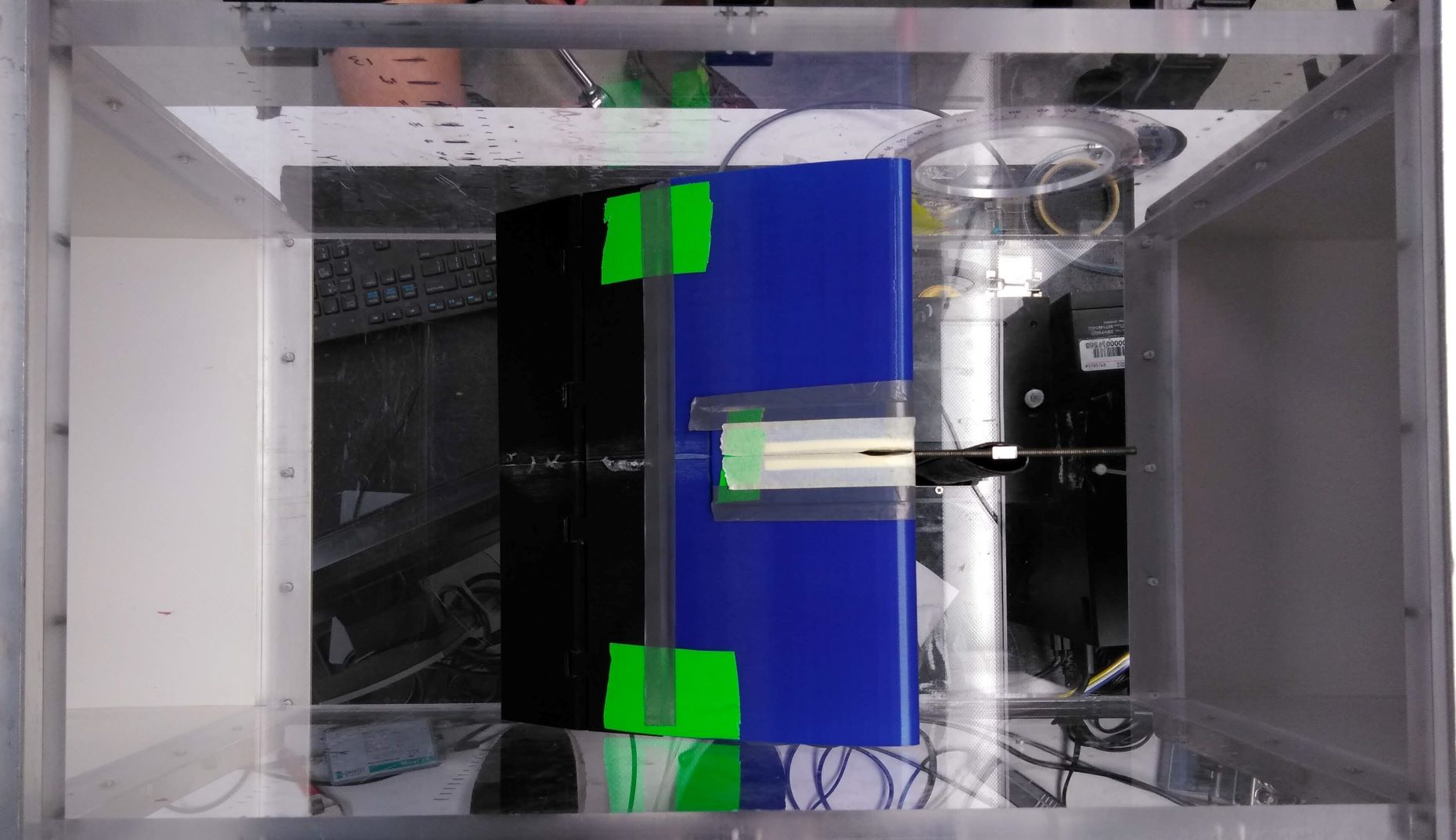

For my third and final Pathways tour, I was tasked with furthering development of the ROSIE (Rendezvous Operation Sensor and Imagery Evaluator) robotic testbed. This vehicle supports rendezvous and docking GN&C testing and sensor performance evaluation with quick feedback and 6-DOF (six-degree-of-freedom) motion emulation. ROSIE is intended to support the rapid development and testing deadlines necessary for the success of Orion and the Artemis program, as well as any future missions requiring precision testing of relative orbital motion.

Contributions

During the course of my internship, I successfully:

- Upgraded ROSIE’s C++ codebase with improved 6-DOF PID control, trajectory sequencing, sensor integration, noise filtering and a PyQt5 GUI analytic toolkit

- Developed LiDAR collision detection and retroreflector cluster identification algorithms with PCL (Point Cloud Library) for docking relative navigation

- Led ROSIE performance testing campaign with OptiTrack motion capture feedback and Velodyne LiDAR payload through extensive test matrix validation
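ROSIE's actual controller lives in its C++ codebase and is not reproduced here. Purely as an illustration of the idea, the Python sketch below shows the simplest way to structure 6-DOF PID control: one independent PID loop per axis. The class and function names, the axis labels and any gains passed in are hypothetical; a flight-quality controller would also need integrator anti-windup, derivative filtering and actuator saturation.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical axis labels for a 6-DOF pose: three translations, three rotations.
AXES = ("x", "y", "z", "roll", "pitch", "yaw")


@dataclass
class PID:
    """Single-axis PID controller (illustrative only: no anti-windup or filtering)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def update(self, setpoint: float, measurement: float, dt: float) -> float:
        error = setpoint - measurement
        self.integral += error * dt
        # No derivative kick on the first call, when there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def make_6dof_controllers(kp: float, ki: float, kd: float) -> Dict[str, PID]:
    """One independent PID loop per axis, all sharing the same (hypothetical) gains."""
    return {axis: PID(kp, ki, kd) for axis in AXES}
```

Decoupling the axes keeps tuning simple; strongly coupled dynamics between axes would call for a more careful design.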

More information on ROSIE and its prior applications to the SEEKER project can be found here.

Side Project: Orion 3D Visualization Library

I also worked on a side project to validate an Orion 3D cockpit graphical display C library for lightweight CAD models. I first developed an automated MATLAB geometric modeling tool, then used MATLAB MEX functions to interface with and test-drive the library through a highly configurable GUI with performance diagnostics. Using these tools, I was also able to significantly refactor the C codebase, reducing computational load and improving the display refresh rate.

Test Demo #1: 6-DOF Multi-Waypoint Diamond Sweep

Test Demo #2: 6-DOF Emulated Rendezvous Maneuver (with LiDAR cluster detection)

Lunar Lander Trajectory Simulation Intern

NASA Johnson Space Center Pathways Co-Op Program

Spring 2020

Houston, Texas

Overview

For my second Pathways tour, I worked with the COMPASS (Core Operations, Mission Planning and Analysis Spacecraft Simulation) software team in collaboration with the JPL DARTS Laboratory. This group helps develop safety-critical software for flight operations analysis in the ascent and EDL (entry, descent and landing) flight regimes, and was recently selected for the 2020 Software of the Year award at Johnson Space Center.

Contributions

My primary task was to develop, analyze, test and deploy (via SVN) a lunar lander ascent-phase simulation from scratch to support HLS (Human Landing System) development for the Artemis program, with the following features:

- A custom LTG (linear tangent guidance) steering law for orbital insertion

- High-fidelity sensor/actuator models and spatial frame management

- Accurate vehicle mass characteristics and propulsion configuration

- New tools for orbital insertion and propagation simulation and analysis
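For reference, the classic linear tangent law (the optimal steering for an ascent under flat-earth, constant-gravity, drag-free assumptions) commands a pitch angle θ whose tangent varies linearly with time, tan θ = tan θ₀ − c·t. The Python sketch below only evaluates that law and the resulting thrust direction; `theta0` and `c` are hypothetical inputs, and the targeting logic a guidance scheme would use to solve for them is omitted. It is not the COMPASS implementation.

```python
import math
from typing import Tuple


def linear_tangent_pitch(t: float, theta0: float, c: float) -> float:
    """Pitch angle (rad above the local horizontal) from tan(theta) = tan(theta0) - c*t.

    theta0 is the initial pitch angle (rad, strictly between -pi/2 and pi/2) and
    c (1/s) sets how fast the thrust vector pitches over. Both are treated here as
    given inputs; a real guidance loop would solve for them to hit the target orbit.
    """
    return math.atan(math.tan(theta0) - c * t)


def thrust_direction(t: float, theta0: float, c: float) -> Tuple[float, float]:
    """Unit thrust vector (downrange, vertical) in a flat-earth frame."""
    theta = linear_tangent_pitch(t, theta0, c)
    return math.cos(theta), math.sin(theta)
```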

Furthermore, I assisted with the development of custom graphics and visualization libraries suited to the COMPASS team's software constraints. As a side task, I also helped develop an IIP (instantaneous impact point) trajectory propagation tool to support vehicle ascent analysis for the FDO (Flight Dynamics Officer) flight control team. More information on COMPASS can be found here.
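As a rough illustration of what an IIP computation involves, the sketch below uses the simplest possible model: a flat, non-rotating Earth with constant gravity and no drag, so the vehicle coasts on a parabola after a hypothetical instantaneous engine cutoff. The function name and arguments are illustrative; an operational IIP tool would account for Earth's curvature and rotation as well as atmospheric effects.

```python
import math
from typing import Tuple

STANDARD_GRAVITY = 9.80665  # m/s^2


def flat_earth_iip(x: float, y: float, h: float,
                   vx: float, vy: float, vz: float,
                   g: float = STANDARD_GRAVITY) -> Tuple[float, float, float]:
    """Return (x_impact, y_impact, time_to_impact) for a drag-free ballistic coast.

    Position (x, y) and velocity (vx, vy) are horizontal; h >= 0 is altitude and
    vz is vertical velocity (positive up). Units must be consistent (e.g. m, m/s).
    """
    if h < 0.0:
        raise ValueError("altitude must be non-negative")
    # Positive root of h + vz*t - 0.5*g*t**2 = 0.
    t_impact = (vz + math.sqrt(vz * vz + 2.0 * g * h)) / g
    return x + vx * t_impact, y + vy * t_impact, t_impact
```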

Fit2Fly Research Associate

NASA Academy at Langley Research Center

Summer 2019

Hampton, Virginia

Overview

In the Summer of 2019, I worked with the NASA Academy, a group of 16 undergraduates assigned to collaborate on one of two major center-wide research projects: Hercules and Fit2Fly. I worked specifically with the Fit2Fly software team, whose goal was to develop a proof-of-concept feasibility simulator from scratch to model business ecosystems for large-scale UAS (unmanned aerial system) enterprises. On our team of three, I specialized in the internal core SWIL (software-in-the-loop) commercial operations simulator, which interfaces with the open-source ArduPilot and QGroundControl software. I also acted as the liaison to the hardware team, ensuring that our simulation work translated properly to real-world UAS testing.

Contributions

I developed a multithreaded Python architecture for multi-UAS mission management consisting of uploading waypoints, performing takeoff and landing, updating flight parameters and injecting simulated weather events when desired. Real-time clock synchronization was closely managed between Fit2Fly processes and ArduPilot SWIL instantiations to ensure accurate simulation. I further developed a modified software version with rerouted TCP connections and realistic flight parameters to drive SRD-280 drones for netted flight demonstration. During research, I wrote software tools for tasks such as HTML web scraping and XML parsing/reconstruction. I went on to present the project and our research on behalf of the team at the AIAA 2020 SciTech Conference (conference publication found here).
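The original Fit2Fly code is not shown here, so the following is only a minimal sketch of one way to organize multithreaded multi-UAS mission management in Python: one worker thread per vehicle, each draining its own command queue in order. `SimulatedVehicle` is a hypothetical stand-in for a connection to an ArduPilot SWIL instance; it records commands instead of sending them, and clock synchronization is not modeled.

```python
import queue
import threading
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple

Command = Tuple[str, Any]  # (command name, payload), e.g. ("takeoff", 10.0)


@dataclass
class SimulatedVehicle:
    """Hypothetical stand-in for one ArduPilot SWIL connection; it only records commands."""
    name: str
    log: List[Command] = field(default_factory=list)

    def execute(self, command: str, payload: Any) -> None:
        # A real implementation would translate this into autopilot messages.
        self.log.append((command, payload))


def vehicle_worker(vehicle: SimulatedVehicle,
                   commands: "queue.Queue[Optional[Command]]") -> None:
    """Execute commands in FIFO order until the None sentinel arrives."""
    while True:
        item = commands.get()
        if item is None:
            break
        vehicle.execute(*item)


def run_missions(missions: Dict[str, List[Command]]) -> Dict[str, SimulatedVehicle]:
    """Run every vehicle's mission on its own thread and wait for all to finish."""
    vehicles: Dict[str, SimulatedVehicle] = {}
    threads: List[threading.Thread] = []
    for name, steps in missions.items():
        vehicle = SimulatedVehicle(name)
        commands: "queue.Queue[Optional[Command]]" = queue.Queue()
        for step in steps:
            commands.put(step)
        commands.put(None)  # sentinel: no more commands for this vehicle
        thread = threading.Thread(target=vehicle_worker, args=(vehicle, commands))
        thread.start()
        vehicles[name] = vehicle
        threads.append(thread)
    for thread in threads:
        thread.join()
    return vehicles
```

Giving each vehicle its own queue keeps per-vehicle command order deterministic while letting vehicles progress independently.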

Time-lapse of simulated pizza deliveries to a local neighborhood

Precision autonomous takeoff and landing tests for a single SRD-280 drone

As a side task for Fit2Fly, I also worked on computational methods for identifying UAS based on their RF (radio frequency) FHSS (frequency-hopping spread spectrum) signal patterns. Two methods were proposed and handed off for further development.

Method #1: Binary Blob Detection

This first approach saturated values to binary one or zero depending on whether the signal strength exceeded a given threshold. Sufficiently large clusters of ones were identified in real time as frequency hops and marked with red circles for visualization, as seen in the waterfall plot below (tested on SRD-280 radio transmitters with an RF Explorer spectrum analyzer). While computationally fast and efficient, this method showed possible unreliability with complex patterns and sensor noise, which motivated method #2.
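The thresholding-and-clustering idea above can be sketched in a few lines of Python. This is only an illustration, assuming the waterfall is a 2D list of signal strengths (rows are time steps, columns are frequency bins); the function name, the choice of 4-connectivity and the `min_size` parameter are hypothetical, and the original real-time implementation is not shown.

```python
from collections import deque
from typing import List, Sequence, Tuple


def detect_hops(waterfall: Sequence[Sequence[float]], threshold: float,
                min_size: int) -> List[Tuple[float, float]]:
    """Return (row, col) centroids of above-threshold clusters with >= min_size cells."""
    rows, cols = len(waterfall), len(waterfall[0])
    # Saturate every cell to 1 (signal exceeded the threshold) or 0.
    binary = [[1 if value > threshold else 0 for value in row] for row in waterfall]
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if not binary[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects one 4-connected cluster of ones.
            cluster = []
            pending = deque([(r, c)])
            seen[r][c] = True
            while pending:
                cr, cc = pending.popleft()
                cluster.append((cr, cc))
                for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and binary[nr][nc] and not seen[nr][nc]:
                        seen[nr][nc] = True
                        pending.append((nr, nc))
            # Small clusters are treated as noise rather than frequency hops.
            if len(cluster) >= min_size:
                centroids.append((sum(p[0] for p in cluster) / len(cluster),
                                  sum(p[1] for p in cluster) / len(cluster)))
    return centroids
```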

Binary blob detection validation test

Method #2: Image Classification

For more robust FHSS identification, image classification (machine learning) with PyTorch was selected as a possible solution. Simulated data with random noise was generated for large-dataset validation, with select augmentations including random cropping, center cropping and resizing. A prediction accuracy of 98% was achieved (a sample set is shown below), although this method has yet to be implemented in real time with the SRD-280 radio transmitters due to time constraints.

Integrated GN&C Analysis Intern

NASA Johnson Space Center Pathways Co-Op Program

Fall 2018

Houston, Texas

Overview

In the Fall of 2018, I assisted the NASA CCP (Commercial Crew Program) with integrated GN&C analysis for the SpaceX Crew Dragon. The work focused on the upcoming implementation of new GN&C algorithms for the ascent LAS (launch abort system) in early atmospheric flight.

Contributions

I conducted in-depth trade studies of these algorithms' performance relative to their predecessors in NASA’s (Unix-based) 6-DOF Trick simulation environment, developed various MATLAB/C++ unit tests and debugged problems in the simulation source code. The handoff results were used by other GN&C teams and aimed at a quantifiable understanding of the underlying mathematical relations and their influence on the vehicle’s overall trajectory and design parameters (aerodynamic, propulsive, structural, etc.).

Timed parachute deployment algorithms were analyzed both in controlled, independent simulations and in Monte Carlo (MC) dispersion analysis, the latter of which revealed potential hardware failure scenarios in off-nominal cases. Custom Python data processing and analysis tools were also developed for this task, the next task and general use in EG4.

Linear-optimized control allocation algorithms were also analyzed, with a close look at the impact and interdependencies of weighting parameters on flight performance. Numerous weight sets were fine-tuned for a variety of use cases. A detailed comparison across the entire flight regime was also made relative to the previous method, which used more traditional control allocation logic.

SpaceX Crew Dragon – Pad Abort Test

SpaceX Crew Dragon – In-Flight Abort Test (IFA)

Research

D3 Flight Software Lead Developer

UF ADAMUS Laboratory

Spring 2019 - Fall 2020

Gainesville, Florida

The Drag De-orbit Device (D3) CubeSat Mission

Since the Spring of 2019, I have worked as the lead flight software engineer developing the first flight-ready, ROS (Robot Operating System) based flight software for the D3 CubeSat mission. The D3 contains four deployable booms that control aerodynamic drag in order to (1) reduce orbital lifetime, (2) de-orbit to a point target and (3) perform collision avoidance. My dedication to the project has included working part-time during my internships as well.
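Why deployable booms give a control handle follows from the standard drag equation. For atmospheric density ρ, speed v relative to the atmosphere, drag coefficient C_D, reference area A and spacecraft mass m, the drag force, the resulting deceleration and the ballistic coefficient β are related as below; changing the booms' configuration changes the effective area A, which scales the deceleration for a given ρ and v. This is the textbook relation, not a description of the D3's specific drag model.

```latex
F_D = \tfrac{1}{2}\,\rho\,v^{2}\,C_D\,A,
\qquad
a_D = \frac{F_D}{m} = \frac{\rho\,v^{2}}{2\,\beta},
\qquad
\beta = \frac{m}{C_D\,A}
```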

Main Contributions

- Developed software modules for radio telemetry links, GPS navigation, finite-state handling, uplink command processing, onboard software updates and failsafe reboots

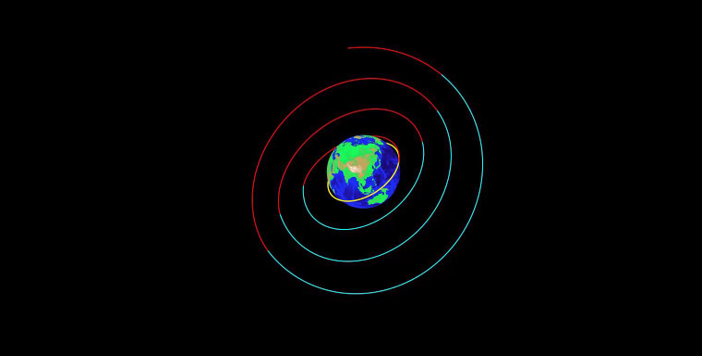

- Created C++ orbital simulation/propagation software to simulate the guidance tracker

- Performed guided trajectory analysis involving LQR (linear quadratic regulator) and EKF (extended Kalman filter) performance for guidance tracker validation

- Led team scheduling, trained new members and prepared test plans for three-layered SWIL (software-in-the-loop), HWIL (hardware-in-the-loop) and FlatSat testing
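The D3 flight software's actual modes and transitions are not listed here, so the Python sketch below only illustrates the general pattern behind finite-state handling with uplinked commands and a failsafe: a transition table keyed by (mode, event), a safe boot mode, and a fault event that drops back to safe. The mode names and event strings are hypothetical.

```python
from enum import Enum, auto
from typing import Dict, List, Tuple


class Mode(Enum):
    """Hypothetical flight modes (not the actual D3 mode list)."""
    SAFE = auto()
    NOMINAL = auto()
    DEORBIT = auto()


# (current mode, event) -> next mode; pairs not listed leave the mode unchanged.
TRANSITIONS: Dict[Tuple[Mode, str], Mode] = {
    (Mode.SAFE, "cmd_nominal"): Mode.NOMINAL,
    (Mode.NOMINAL, "cmd_deorbit"): Mode.DEORBIT,
    (Mode.NOMINAL, "fault"): Mode.SAFE,
    (Mode.DEORBIT, "cmd_nominal"): Mode.NOMINAL,
    (Mode.DEORBIT, "fault"): Mode.SAFE,
}


class FlightStateMachine:
    """Table-driven state machine: uplinked commands and faults are both 'events'."""

    def __init__(self) -> None:
        self.mode = Mode.SAFE  # a fresh start (e.g. after a failsafe reboot) lands in SAFE
        self.history: List[Mode] = [self.mode]

    def handle(self, event: str) -> Mode:
        next_mode = TRANSITIONS.get((self.mode, event), self.mode)
        if next_mode is not self.mode:
            self.mode = next_mode
            self.history.append(next_mode)
        return self.mode
```

Keeping the transitions in a single table makes every allowed mode change easy to review and test.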

I was also first author of both a paper and a poster on the flight software, submitted for presentation at the 2020 SmallSat Conference (found here). The software has also recently been picked up for use on the PATCOOL mission in addition to D3, with both CubeSats targeting NET (no earlier than) October 2021 for launch from Cape Canaveral.

Spacecraft Formation Flight Researcher

UF ADAMUS Laboratory

Spring 2018 - Fall 2018

Gainesville, Florida

Spaceflight Trajectory Reconfiguration

During the Summer and Fall of 2018, I assisted with software development for the relative configuration of spacecraft in formation maneuvers under the guidance of a postdoc.

My first task revolved around developing a simple Simulink model to simulate the relative dynamics of two satellites (chief and deputy) under J2 perturbations for independent validation of pre-existing code.

I then implemented an extended RT-RT (radial-tangential radial-tangential) burn control algorithm based on the Clohessy-Wiltshire equations as a modification to a pre-existing Simulink model. This burn control approach produced a relative trajectory that fit within the physical constraints of the laboratory’s air-bearing surface for testing with custom-made orbital emulation robots (successfully tested, although no video was taken of the test).
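For reference, the homogeneous Clohessy-Wiltshire equations (x radial, y along-track, z cross-track, mean motion n of a circular chief orbit) are ẍ − 2nẏ − 3n²x = 0, ÿ + 2nẋ = 0 and z̈ + n²z = 0, and they have a well-known closed-form solution. The Python sketch below propagates a relative state with that solution, the kind of building block an impulsive-burn planner can use between burns; the function name and state ordering are assumptions, and the extended RT-RT algorithm itself is not shown.

```python
import math
from typing import Tuple

State = Tuple[float, float, float, float, float, float]  # (x, y, z, vx, vy, vz)


def cw_propagate(state: State, n: float, t: float) -> State:
    """Closed-form Clohessy-Wiltshire propagation of a relative state over time t.

    Axes: x radial (outward), y along-track, z cross-track; n is the chief's mean
    motion (rad/s). No burns are applied during the interval.
    """
    x0, y0, z0, vx0, vy0, vz0 = state
    nt = n * t
    s, c = math.sin(nt), math.cos(nt)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = 6 * (s - nt) * x0 + y0 - (2 / n) * (1 - c) * vx0 + (4 * s - 3 * nt) / n * vy0
    z = c * z0 + (s / n) * vz0
    vx = 3 * n * s * x0 + c * vx0 + 2 * s * vy0
    vy = -6 * n * (1 - c) * x0 - 2 * s * vx0 + (4 * c - 3) * vy0
    vz = -n * s * z0 + c * vz0
    return x, y, z, vx, vy, vz
```

Choosing vy0 = −2n·x0 removes the secular along-track drift, so the deputy returns to its starting state after one orbital period, which is a convenient sanity check.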

Orbital emulation robot validation test

Projects

Vertical Takeoff & Landing (VTOL) Hopper Vehicle

Independent Hobby Project

Spring 2018 - Present

Misc

Overview

In the Spring of 2018, I began an ongoing project to develop a VTOL hopper vehicle equipped with:

- A Teensy (Arduino-compatible) microcontroller

- A propulsive EDF (electric ducted fan)

- An IMU (Inertial Measurement Unit)

- Four servo motors for nozzle actuation

Details

The system is designed to be 6-DOF capable, with a gimbaled nozzle for pitch/yaw control and two fins located directly below the EDF for roll control. A four-bar linkage solution to the nozzle-servo kinematics was derived and modeled in MATLAB and fed into the C-based flight software for precise servo-to-nozzle actuation mapping.
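The original MATLAB derivation is not reproduced here, but a standard way to solve four-bar kinematics in closed form is Freudenstein's equation. The Python sketch below computes the output (rocker) angle for a given input (crank) angle, assuming the usual textbook convention: link lengths a (input crank, e.g. a servo horn), b (coupler), c (output rocker, e.g. a nozzle arm) and d (ground link), with angles measured from the ground link. The mapping of links to the actual servo and nozzle hardware is hypothetical.

```python
import math
from typing import Tuple


def fourbar_output_angles(a: float, b: float, c: float, d: float,
                          theta2: float) -> Tuple[float, float]:
    """Both assembly solutions for the output angle theta4 (rad) given crank angle theta2.

    Uses Freudenstein's equation K1*cos(t4) - K2*cos(t2) + K3 = cos(t2 - t4)
    with the tangent half-angle substitution. Raises ValueError if the linkage
    cannot be assembled at this input angle.
    """
    k1 = d / a
    k2 = d / c
    k3 = (a * a - b * b + c * c + d * d) / (2 * a * c)
    cos2, sin2 = math.cos(theta2), math.sin(theta2)
    big_a = cos2 - k1 - k2 * cos2 + k3
    big_b = -2 * sin2
    big_c = k1 - (k2 + 1) * cos2 + k3
    if abs(big_a) < 1e-12:
        # Degenerate quadratic: one root is theta4 = pi, the other solves big_b*t + big_c = 0.
        return 2 * math.atan(-big_c / big_b), math.pi
    disc = big_b * big_b - 4 * big_a * big_c
    if disc < 0:
        raise ValueError("linkage cannot be assembled at this input angle")
    root = math.sqrt(disc)
    # t = tan(theta4 / 2); doubling the atan2 result gives theta4 modulo 2*pi.
    return (2 * math.atan2(-big_b - root, 2 * big_a),
            2 * math.atan2(-big_b + root, 2 * big_a))
```

A lookup table built from this function, sampled over the servo's range, is one simple way to map servo angles to nozzle angles on a microcontroller.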

This project is currently on hold; however, I plan to return to it in the near future.

MATLAB nozzle-servo kinematic solution

A prototype test stand was 3D-printed and assembled to test nozzle actuation in response to IMU readings. Precise motion has been confirmed at a maximum deflection angle of 24 degrees; however, further design and analysis is desired before moving to a flight-ready prototype.

Nozzle “square-sweep” motion demonstration

VP Internal / CanSat Structures Lead

Space Systems Design Club

Fall 2017 - Fall 2019

Gainesville, Florida

Overview

During 2019, I served as the internal Vice President of the SSDC (Space Systems Design Club), managing competition and project participation including CanSat, Micro-G Next and the gyroscopic chair outreach project. Furthermore, I was the lead structures and dynamics engineer for the 2017-2018 CanSat competition team, which required developing a small “re-entry vehicle” that must stabilize under chaotic rocket deployment, eject parachutes and collect telemetry readings of its surroundings while protecting a delicate payload.

CanSat Contributions

To bring the concept to fruition, I personally made several key contributions, including:

- A multi-stage spring-deployable ABHS (aero-braking heat shield) frame for aero-stabilization

- Mass savings analysis conducted through theory and SolidWorks FEA (Finite Element Analysis)

- MATLAB numerical models to optimize ABHS deployment and simulate drop-tests

I also single-handedly managed successful drone drop-test preparation and execution with the UASRP Lab, including assembly of a custom deployment fairing. Despite a successful PDR/CDR campaign, the team was unable to compete due to major delays from corrupted electronics/PCB files; nevertheless, a competition-ready design was still completed under a $650 budget.

Featured Coursework

EAS4810C Aero Sciences Lab

Morphing Trailing-edge Airfoil Wind Tunnel Testing

Overview

During the Fall of 2019, I led a team of three for an EAS4810C final project to quantify the performance of MTE (morphing trailing-edge) airfoils in a subsonic wind tunnel. A NACA 0012 configuration was tested at Mach 0.045 with:

- 10° and 20° trailing-edge deflections (for both aileron and MTE)

- −10° < angle of attack < 10°

Contributions

I developed ~1000 lines of MATLAB code for the project for two purposes: first, to facilitate extraction of the desired airfoil designs based on the referenced 14.4° design (see the algorithm flow-chart above); second, to provide an analysis toolkit that applies wind-tunnel corrections and derives the desired results.

Furthermore, I was entirely responsible for the CAD design, including an internal hinge with a small form factor capable of withstanding aerodynamic loads and a modular, 3D-printable design architecture for quick part interchangeability given the tight testing time frame.

Below, results are shown displaying improved performance for MTE configurations over ailerons, particularly at higher angles of attack.

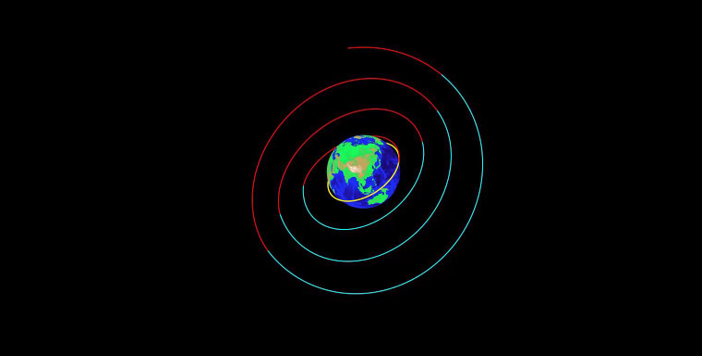

EAS4510 Astrodynamics

Fractioned Two-Impulse Hohmann Transfer Maneuver

Overview

For my EAS4510 astrodynamics class, the final project dealt with modeling a non-planar, N-fractioned, two-impulse Hohmann transfer maneuver. The project and material were so interesting that I decided to make a visual animation of the maneuver in simulated time (recorded to YouTube, link here). Inspired by this class, I will be taking a graduate-level Advanced Astrodynamics course in my final semester at UF (Fall 2020)!
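As a baseline for the maneuver described above, the classic coplanar Hohmann transfer between circular orbits of radii r₁ and r₂ needs two burns, Δv₁ = √(μ/r₁)(√(2r₂/(r₁+r₂)) − 1) and Δv₂ = √(μ/r₂)(1 − √(2r₁/(r₁+r₂))), with a transfer time of half the transfer ellipse's period. The Python sketch below evaluates these textbook formulas; it does not model the non-planar or N-fractioned aspects of the class project, and the function name is illustrative.

```python
import math
from typing import Tuple

MU_EARTH = 398600.4418  # km^3/s^2, Earth's gravitational parameter


def hohmann(r1: float, r2: float, mu: float = MU_EARTH) -> Tuple[float, float, float]:
    """Return (dv1, dv2, transfer_time) for a coplanar Hohmann transfer.

    r1, r2: circular orbit radii (km); burns are reported as magnitudes (km/s);
    transfer_time is half the period of the transfer ellipse (s).
    """
    a_transfer = (r1 + r2) / 2  # semi-major axis of the transfer ellipse
    dv1 = math.sqrt(mu / r1) * (math.sqrt(r2 / a_transfer) - 1)
    dv2 = math.sqrt(mu / r2) * (1 - math.sqrt(r1 / a_transfer))
    transfer_time = math.pi * math.sqrt(a_transfer ** 3 / mu)
    return abs(dv1), abs(dv2), transfer_time
```

For a transfer from a 300 km altitude circular orbit (r₁ = 6678 km) to GEO (r₂ = 42164 km), this gives roughly 2.43 km/s and 1.47 km/s, with a coast of about 5.3 hours.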

Sensor Fusion Analysis With YOLOv5 Object Detection

[CSE455] Computer Vision - Final Project

Video Project Summary

Abstract

Sensor fusion is a hot topic in the world of spatial perception. In this project, we sought to develop an early-fusion object detector (a 4-channel input combining RGB and depth information from camera/LiDAR sensors) and assess its performance relative to the unfused 3-channel RGB and 1-channel depth models more traditionally seen in object detection research. Subsequent analysis showed promising results for the fused model that could motivate further research and implementation in perception-based navigation systems for autonomous vehicles in adverse conditions.

Project Summary

We are PhD students in the Aeronautics & Astronautics department with the Autonomous Controls Laboratory (ACL). Our lab has a high-fidelity motion capture system as well as quadcopter and rover (“puck”) fleets (~30 of each). Here is more context on the use case of the rovers. Using these resources, we are interested in developing perception capabilities for future research in autonomous robotics. To this end, we want to create an object detector that identifies ACL pucks from an egocentric perspective, and determine where optimal performance lies among a 3-channel RGB input, a 1-channel depth map input and a fused 4-channel input.

Dataset Generation

For the baseline training and testing data, we collected 1,102 visual and depth image pairs containing 2,670 instances of pucks in the ACL motion capture studio, using the dual camera and LiDAR sensor system of an iPhone 12 Pro Max. This system is well suited to capturing a multi-modal dataset in that it provides both a depth map and visual imagery, calibrated and rectified, meaning that there is a 1:1 pixel correspondence between the depth map and the visual image. We used the Record3D application because it was the only application that supported recording from both sensors simultaneously. It provides imagery in which the visual image and corresponding depth map are stitched together, as seen to the left.

All dataset imagery was captured from an egocentric (puck) viewpoint in well-lit conditions in the ACL motion capture studio. Specifically, we collected two separate videos and split them into images by taking every 6th frame. These images were then used to create the following three datasets:

- Visual-only (3 channel RGB input)

- Depth-only (1 channel grayscale input)

- Fused visual & depth (4 channel concatenated input)

Each dataset above was then randomly split 30/70 into test and training sets. Additionally, 10,080 visual-depth pairs across 10 situational test cases were collected for further analysis of our model; these are discussed in the Situational Testing Results section.
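The frame sampling and 30/70 split described above can be sketched with the Python standard library. The helper names and the fixed seed are hypothetical. One design choice worth noting: splitting a single list of shared frame identifiers, then applying that same split to the visual-only, depth-only and fused variants, keeps the three test sets identical and makes the model comparison fairer. The source does not say whether the original split was done this way.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def sample_frames(frames: Sequence[T], step: int = 6) -> List[T]:
    """Keep every `step`-th frame, starting with the first."""
    return list(frames[::step])


def split_train_test(items: Sequence[T], test_fraction: float = 0.3,
                     seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle reproducibly, then hold out round(len * test_fraction) items for testing."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]
```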

Techniques

Pre-processing

Throughout the pre-processing of all our datasets, we used several command-line tools. FFmpeg was used to split the videos captured by Record3D into still images, and ImageMagick's magick tool was used to split apart the visual images and depth maps.

We used Supervisely to annotate our dataset imagery. It provides a web-based labeling interface with object tracking algorithms (as described by this paper) to assist in the labeling task. While all of the annotations were quality-checked by us, only 106 of the 2,670 annotations were made manually; the rest were created by the tracking algorithm. Since there is a 1:1 pixel correspondence between the depth map and visual imagery, we used the same annotations for the visual-only, depth-only and fused datasets.

We also wrote a Python script to concatenate the depth map onto the visual image to make a 4-channel RGB-D image (see fused_and_reorg_dataset.py). To the right is an example of what this looks like for a PNG (the fourth, depth channel is displayed as the “alpha” channel for visualization purposes).
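The contents of fused_and_reorg_dataset.py are not shown here, so the following is only a minimal NumPy sketch of the concatenation step, assuming the visual image and depth map have already been split apart, share the same height and width, and use the same 8-bit dtype. The function name is hypothetical.

```python
import numpy as np


def fuse_rgbd(rgb: np.ndarray, depth: np.ndarray) -> np.ndarray:
    """Stack an H x W x 3 visual image and an H x W (or H x W x k) depth map into H x W x 4."""
    if depth.ndim == 3:
        # Grayscale depth may be stored with one or more identical channels; keep the first.
        depth = depth[..., 0]
    if rgb.ndim != 3 or rgb.shape[2] != 3:
        raise ValueError("expected an H x W x 3 visual image")
    if rgb.shape[:2] != depth.shape:
        raise ValueError("visual image and depth map must have the same height and width")
    if rgb.dtype != depth.dtype:
        raise ValueError("visual image and depth map must share a dtype (e.g. uint8)")
    return np.dstack([rgb, depth])
```

If such an array is saved as a PNG with OpenCV's cv2.imwrite, the fourth channel is stored as alpha and OpenCV treats the first three channels as BGR, so channel order matters when writing and reading the fused images back.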

Network

YOLOv5, which is largely based on YOLOv3, was chosen as the object detection network for this project, as it is a relatively fast and accurate detector with a well-supported codebase. However, we had to modify the code to work with 4-channel images. The primary modification was that everywhere cv2.imread(img) was called, we added the cv2.IMREAD_UNCHANGED flag, i.e. cv2.imread(img, cv2.IMREAD_UNCHANGED), which allowed the correct number of channels to persist. Additionally, there were a few places where 3-channel inputs were hard-coded and needed to be changed.

The following training parameters were considered:

- Augmentations/Hyperparameters: We used most of the same dataset augmentations mentioned in the Bag of Freebies section of this paper. Notably, we did not use any HSV augmentations, as these rely on having 3 channels.

- Batch Size: We chose the largest batch size that our GPU would allow. We trained our models on a Titan RTX, which has 24 GB of VRAM. Because the 720-pixel side of our native imagery (720 x 960) is not a multiple of 32, as required by a stride of 32, we trained at a resolution of 736 x 960, at which we were able to use a batch size of 100.

- Epochs: We trained for 30 epochs, as this achieved suitable mAP (mean average precision) performance for our models. Training for longer could lead to overfitting of the model.

- Model Architecture: YOLOv5 offers several model architectures. We chose the YOLOv5s architecture, as it is lightweight enough to run on edge hardware (which the ACL pucks use) while still providing acceptable detection performance.
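The 720 to 736 change in the batch-size discussion above is simply each image dimension rounded up to the next multiple of the stride: 720 / 32 = 22.5 rounds up to 23, and 23 × 32 = 736, while 960 / 32 = 30 is already whole. The small Python helper below (hypothetical names) makes that arithmetic explicit; it does not reproduce how YOLOv5 itself pads or resizes images.

```python
from typing import Tuple


def round_up_to_stride(size: int, stride: int = 32) -> int:
    """Smallest multiple of `stride` that is >= `size` (integer ceiling division)."""
    return -(-size // stride) * stride


def padded_resolution(height: int, width: int, stride: int = 32) -> Tuple[int, int]:
    """Training resolution (height, width) after rounding both sides up to the stride."""
    return round_up_to_stride(height, stride), round_up_to_stride(width, stride)
```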

Post-processing

- Converted fused annotation output frames into a video.

- Horizontally-concatenated annotated visual and depth map inference testing videos.

- Vertically-concatenated the above two videos together.

- Applied an overlay to the video showing a transparent label for each view.

Baseline Training & Testing Results

We used Weights & Biases to monitor the mAP, Precision and Recall metrics throughout the training process.

Objective Confidence Loss: In both the test and training sets' objective confidence losses, the visual-only and fused models were very close to each other, and both outperformed the depth-only model.

Bounding Box Loss: Similarly, both the visual-only and fused models outperformed the depth-only model in bounding box loss for both the testing and training sets.

mAP@0.5:0.95, Recall, Precision: We see similar results for mAP@0.5:0.95, mAP@0.5, recall and precision, in that the visual-only and fused models perform nearly the same and both outperform depth-only.
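For readers unfamiliar with the notation: a detection counts as a true positive when its IoU (intersection over union) with a ground-truth box meets a threshold, mAP@0.5 uses a threshold of 0.5, and mAP@0.5:0.95 averages mAP over thresholds from 0.50 to 0.95 in steps of 0.05. The Python sketch below computes IoU for axis-aligned boxes and lists those thresholds; it is illustrative only and does not reproduce the full mAP computation performed by the YOLOv5 tooling.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(box_a: Box, box_b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


# IoU thresholds averaged over by mAP@0.5:0.95 (0.50, 0.55, ..., 0.95).
IOU_THRESHOLDS: List[float] = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def is_match(pred: Box, truth: Box, threshold: float = 0.5) -> bool:
    """True if the prediction overlaps the ground truth enough to count at this threshold."""
    return iou(pred, truth) >= threshold
```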

Situational Testing Results

In addition to performing detailed analysis with test frames taken from the same video as the training data, we also sought to assess performance across 10 more challenging “situational” test cases. A brief explanation of each test case is given below. Note: all of the cases with the exception of the domain shift were captured in the ACL Motion Capture Studio.


- Drone Decoy: Our dataset only includes a puck class and, furthermore, only imagery of pucks close up without any other puck-sized objects in the immediate vicinity representing true-negative cases. To determine how resilient our models were at rejecting false-positives, we collected imagery when a drone was also in frame and near the pucks. This is an especially tricky case for the network, as both the puck and drone share some of the same circuit boards, so there are some visually-similar features between the drone and the puck.

- Crashed Puck: All of the pucks imaged in our dataset were fully assembled; however, we may want to identify pucks that are broken and may not visually resemble the original puck. To this end, we used a puck broken into several pieces to see how our models performed on a broken rather than fully assembled puck.

- Tumbled Pucks: All of the pucks imaged in our dataset were upright in their operating state, and so we wanted to determine how our models performed when the pucks had fallen over. Similar to the above test, we collected imagery of our pucks in a tumbled state.

- Overhead: Since our dataset was collected from an egocentric viewpoint (i.e. from the ground), we sought to find how robust our models are to a change in viewpoint, so we collected imagery from an overhead viewpoint.

- Puck Swarm: Our dataset included at most four pucks in a single image. We therefore wanted to determine whether model performance was affected when significantly more pucks (>30) were in frame.

- Solid Occlusion: In our dataset, there were only cases of occlusion when one puck occluded another or one puck was partially out of the frame. We wanted to see how our model performance was affected when solid obstacles were introduced into the environment to provide occluded imagery.

- Diffuse Occlusion: We also wanted to see how model performance was affected when the pucks were occluded by a net, representing a diffuse occlusion setting. A practical example of such a case is looking at another puck through vegetation or branch-like objects.

- Low light: Tested model performance when lighting within the studio was partially-diminished.

- Very low light: Tested model performance when lighting within the studio was fully-diminished (except for the blue light emitted by the motion capture cameras).

- Domain Shift: Since our dataset was collected entirely in the ACL Motion Capture Studio, we wanted to determine how the performance of the three models would be affected by a domain shift. To this end, we collected imagery from a puck on grass outdoors.

Using the aforementioned post-processing tools, all 10 test cases are demoed below.

Test Demos

Drone Decoy Test

Crashed Puck Test 🙁

Tumbled Pucks Test

Overhead Test

Puck Swarm Test

Solid Occlusion Test

Diffuse Occlusion Test

Low Light Test

Very Low Light Test

Domain Shift Test

From the above videos, we created our own qualitative assessment of each model for all 10 test cases, as shown below. It is important to note that a more detailed assessment would call for an mAP analysis like the one in the previous section; however, this would require instance annotations for all 10,080 visual-depth pairs, which would be a time-consuming task. Interpretations of performance also require some context as to which metrics are most valuable for a given task, e.g. precision vs. recall. In our case, here are a few of the things we looked for in determining performance:

- How often does the model fail to detect pucks? Are these brief failures over a few frames or consistent failure over many frames?

- How often does the model detect erroneous objects as pucks (false-positives)? Are these sub-detections (e.g. detecting an omniwheel as a puck) or detections of completely unrelated things?

From the above, we can make a few direct observations.

First, the fused sensor model does appear to show improved performance compared to its separated counterparts. Visual-only performs quite closely, while depth-only performs drastically worse overall.

Second, and perhaps most importantly, the fused model displays more robustness and well-roundedness, experiencing below-average performance in only one test, while the other two models each experienced below-average performance in three tests. This is particularly important for an autonomous system such as a terrestrial robot or self-driving car, which may be exposed to a variety of different environments and situations. For example, a self-driving car might drive through a tunnel and experience low-light conditions, or experience a domain shift when driving through a rural farm as opposed to an urban city.

Conclusions & Further Work

As discussed in the previous section, there appear to be some interesting benefits that the fused visual-depth input and object detection model can provide in terms of robust, well-rounded performance across an array of situations that an autonomous vehicle may encounter.

The main problem we encountered during this project was simply a lack of time. We had actually started developing our own custom PyTorch YOLO-based object detector, including loading the 4-channel data into a PyTorch dataset (which by itself was a very cumbersome task) and building a class to construct model layers from YOLOv3’s configuration. However, we soon found that building out the entire architecture was not feasible in this time frame given our other tasks, which led us to import and use YOLOv5 instead.

We also had a goal of porting our software onto an NVIDIA Jetson AGX Xavier and attempting to run the detector in real time on a puck equipped with this hardware (along with the iPhone), as well as implementing a simple trajectory generation algorithm, but we were unable to get to these either. That said, both the custom detector and the hardware port present opportunities for future work: the former would allow deeper customization of the network, and the latter would allow the object detector pipeline to be tested on robotic hardware in a given environment. It would be interesting to perform a deeper analysis of how this compares to the more standard methods of visual/RGB object detection and depth-based object detection. There is also a plethora of other topics to explore in the world of sensor fusion, as outlined by this fascinating survey paper.

As two graduate students who are very interested in the world of spatial perception applied to autonomous systems, we may even be able to make use of this project to perform some of these furthered research objectives ourselves!

References & Useful Resources

- YOLOv3: An Incremental Improvement (Redmon, Farhadi)

- YOLOv4: Optimal Speed and Accuracy of Object Detection (Bochkovskiy et al.)

- Deep Multi-modal Object Detection and Semantic Segmentation for Autonomous Driving: Datasets, Methods, and Challenges (Feng et al.)

- OTA: Optimal Transport Assignment for Object Detection

- Ultralytics YOLOv5 GitHub Repository

- Implementing YOLOv3 Using PyTorch